Can AI Threaten Human Survival?

The story of artificial intelligence is a mix of excitement and fear: AI is both our brightest hope and our deepest worry. On one hand, it brings new discoveries that can transform how we live and work. On the other hand, many people wonder: what if, one day, machines become smarter than us and no longer listen to us?

In March 2023, the Future of Life Institute, a global think tank, issued an open letter urging AI labs to pause the training of AI systems more powerful than GPT-4 for at least six months, warning of a reckless race without safety rules. Its signatories included Elon Musk (CEO of Tesla and SpaceX), Steve Wozniak (co-founder of Apple), Yuval Noah Harari (historian and author), and many others.

Soon after, the Center for AI Safety issued an even starker warning:

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

This was backed by Geoffrey Hinton (the ‘Godfather of AI’ and 2024 Nobel Prize winner), Sam Altman (CEO of OpenAI), and Demis Hassabis (CEO and Co-founder of Google DeepMind). When leaders of such stature raise alarms, the world must listen.

Why Experts Feel Uneasy

Why do these brilliant minds feel so uneasy? Because the results have begun to surprise even their own creators. The explosive rise of generative AI has stunned the world with creativity, reasoning, and problem-solving far beyond expectations. Machines are no longer just following commands—they show sparks of human-like intelligence. They now write, pass exams, draft books, compose songs, code, and design images and videos. If the pace continues, will the human brain lose its place as the greatest masterpiece of the universe to an even more astonishing creation—artificial intelligence?

To answer this, we must dive deep. We won’t use heavy math. Instead, we’ll peel back the layers of both the brain and artificial neural networks, one of AI’s most powerful concepts and itself inspired by the brain, to see how neurons wire thoughts and how algorithms shape logic. In the end, the answer won’t be handed to us; it will emerge as we journey further.

So, let’s open the hood of both the brain and AI, and begin the exploration.

How the Human Brain Works

Imagine a city so big that it never sleeps. Roads twist in all directions, cars honk, signals flash, and information moves every second. That city is your brain. Instead of buildings, it has neurons—tiny living cells, about 86 billion of them.

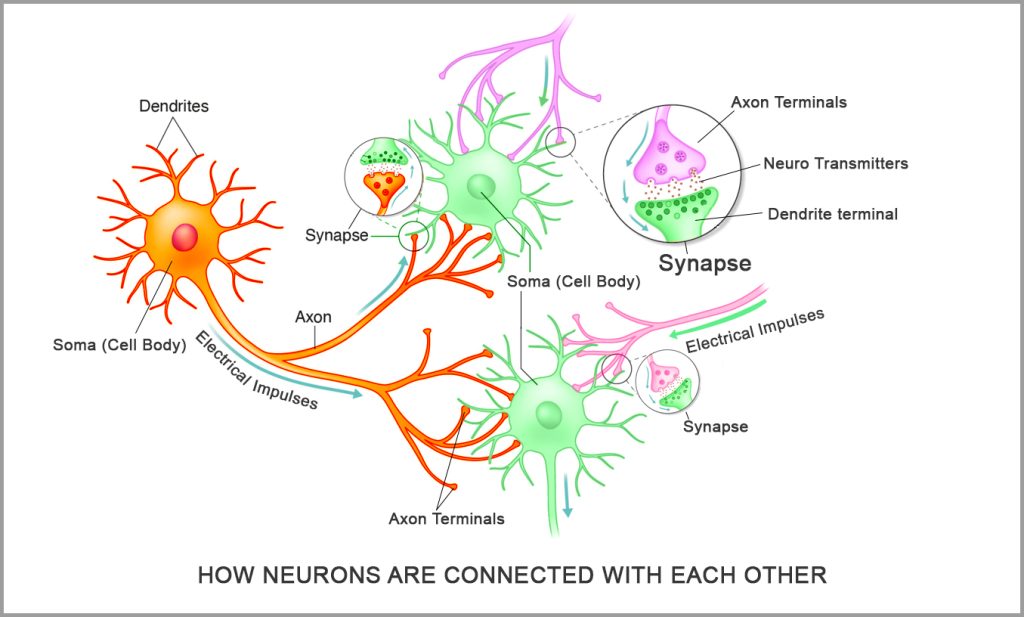

Each neuron usually has three main parts:

- A soma (cell body), like the control centre.

- Dendrites, small branches that receive signals.

- An axon, a long cable that sends signals away.

At the end of an axon are axon terminals, which pass the message to other neurons. Most often the signal reaches the receiving neuron’s dendrites, though it can also land on the soma or even on another axon. Neurons usually don’t touch directly. Instead, they connect at tiny junctions called synapses. At most synapses a small gap, the synaptic cleft, separates the two cells, and the message crosses it using chemical messengers called neurotransmitters, such as dopamine or serotonin.

The Synapse: The Brain’s True Hero

The synapse is the real hero of the brain. It helps decide whether a signal is passed on or ignored, and it adjusts its strength with experience. With an estimated 100 trillion or more synapses, this living web gives the brain its unmatched power. Some connections are strong and fast; others are weak and slow. Synapses close to the soma generally have more influence than distant ones.

This endless dance of synapses (strong, weak, fast, slow) creates the music of thought. It is how you learn, remember, imagine, and dream. When you practice the piano, synapses grow stronger; when you forget, they weaken. This flexibility, called plasticity, is the secret of intelligence.

So, the brain is not a rigid machine. It is an ever-changing network of neurons and synapses, reshaping itself every moment of life. From childhood to old age, this vast city inside your head writes the story of who you are.

Neural Networks – Machines Inspired by the Brain

Artificial intelligence is a vast field, but one of its most powerful ideas is the neural network, inspired by the human brain. Many approaches have been tried over the years, but neural networks have proved especially effective, driving today’s most exciting breakthroughs:

- Language models like ChatGPT and Google Gemini

- Image recognition like Google Photos and Apple Face ID

- Generative AI like Midjourney and Synthesia

- Self-driving cars like Tesla Autopilot and Waymo

- Coding assistants like GitHub Copilot and Cursor

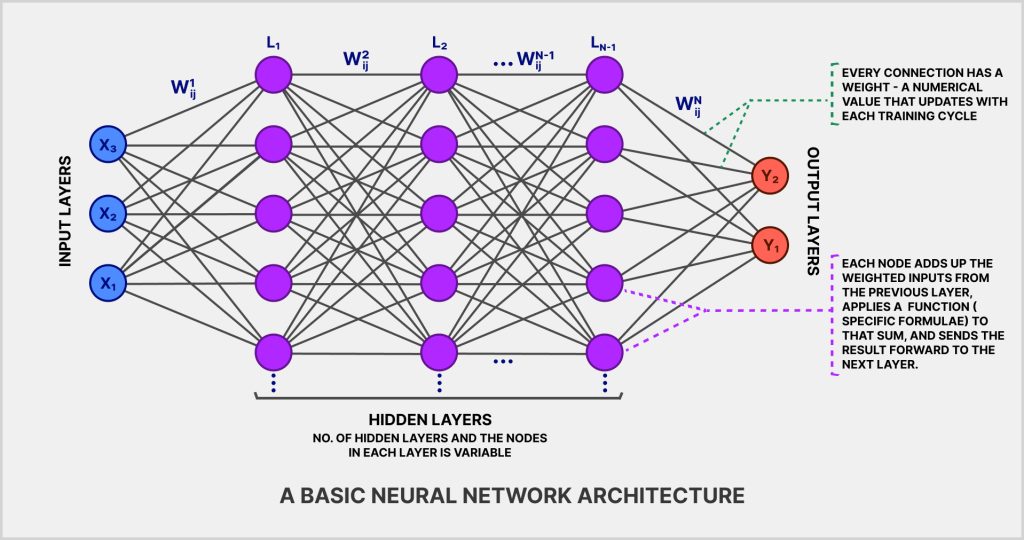

A neural network shares several ideas with the brain. The brain has approximately 86 billion neurons connected by synapses; a neural network has nodes (artificial neurons) connected by weights.

In the brain, synapses become stronger or weaker as we learn. In a neural network, training adjusts weights again and again until the network produces the right answers. Once training is finished, the weights usually stay fixed when the network is used.
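To make “adjusting weights again and again” concrete, here is a minimal sketch of gradient descent, one common way training nudges weights. It assumes a toy task (learning the rule y = 2x from four made-up examples) and a network reduced to a single weight; real networks adjust millions or billions of weights in broadly the same spirit.

```python
# Minimal sketch: training one weight w so that w * x matches y = 2x.
# The data and learning rate are made up for illustration.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]   # the "right answers"

w = 0.0                     # start from an arbitrary weight
learning_rate = 0.01        # size of each correction step

for step in range(200):
    # Gradient of the mean squared error with respect to w:
    # d/dw mean((w*x - y)^2) = mean(2 * (w*x - y) * x)
    grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    w -= learning_rate * grad   # nudge the weight to reduce the error

print(round(w, 3))  # ≈ 2.0 after training

# Once training is finished, the weight stays fixed and is simply used.
print(w * 5.0)      # roughly 10.0
```

Each pass shrinks the error a little; after enough passes the weight settles near the value that produces the right answers.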

Basic (feedforward) neural networks work like a one-way street. Data flows from left to right: input layer → hidden layers → output layer.

Input Layer – Where Data Enters

The input layer is the starting point. Before data enters, it is turned into numbers:

- An image is broken down into thousands of pixel values.

- Text becomes numbers in two steps: tokenization splits it into small pieces (tokens) and maps each one to an ID, and embeddings turn each ID into a list of numerical values.
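A hypothetical, heavily simplified sketch of those two text steps follows. It assumes a word-level tokenizer and a tiny randomly filled embedding table with 4 numbers per token; real language models use learned subword tokenizers and learned embedding tables with hundreds or thousands of numbers per token.

```python
import random

text = "the cat sat on the mat"

# Step 1, tokenization (simplified to whole words): map each token to an integer ID.
vocab = {}
token_ids = []
for word in text.split():
    if word not in vocab:
        vocab[word] = len(vocab)   # assign the next unused ID
    token_ids.append(vocab[word])

# Step 2, embeddings: each ID selects one row of a table of numbers.
# This table is random (an assumption for the demo); in a trained model it is learned.
EMBED_DIM = 4
random.seed(0)
embedding_table = [[random.uniform(-1, 1) for _ in range(EMBED_DIM)]
                   for _ in range(len(vocab))]
vectors = [embedding_table[i] for i in token_ids]

print(token_ids)                      # [0, 1, 2, 3, 0, 4]
print(len(vectors), len(vectors[0]))  # 6 4
```

Note that both occurrences of “the” get the same ID and therefore the same vector.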

Each node in this layer represents one feature. For example, a 28×28 grayscale image needs 784 input nodes, one for each pixel. The input layer holds these numbers and passes them to the hidden layers.
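The 28×28 example can be sketched in a few lines. The “image” below is synthetic (a bright 8×8 square on a dark background), and scaling pixel values from 0–255 down to 0–1 is an assumed, though common, preprocessing choice.

```python
HEIGHT, WIDTH = 28, 28

# Synthetic grayscale image: 0 = black, 255 = white.
image = [[0] * WIDTH for _ in range(HEIGHT)]
for r in range(10, 18):
    for c in range(10, 18):
        image[r][c] = 255  # an 8x8 white square

# Flatten row by row and scale to 0.0..1.0: one number per input node.
inputs = [pixel / 255.0 for row in image for pixel in row]

print(len(inputs))  # 784
print(sum(inputs))  # 64.0 (the 64 white pixels, each now 1.0)
```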

Hidden Layers – Where Learning Happens

The hidden layers do the real work. A network may have only a few hidden layers or sometimes hundreds. Each hidden layer can contain many nodes, depending on the size and purpose of the network. For example, a small network may have 3 hidden layers with 50 nodes each, while a large one may have hundreds of layers with thousands of nodes.

- Connections: In basic networks, each node links to all nodes in the next layer.

- Weighted Sum: Each input is multiplied by its weight, and the results are added together along with a bias to produce a single value.

- Activation Function: The value is passed through a special formula called an activation function. This formula decides how strongly the node should respond before sending the result to the next layer.
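The three steps above (connections, weighted sum, activation) fit in a short sketch. It uses ReLU, one common activation function that passes positive values through and turns negative ones into zero; the inputs, weights, and biases are made-up numbers chosen only to show the arithmetic.

```python
def relu(z):
    """Activation: pass positive values through, turn negatives into 0."""
    return max(0.0, z)

def node(inputs, weights, bias, activation=relu):
    """One artificial node: weighted sum of inputs, plus bias, then activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

def dense_layer(inputs, weight_rows, biases, activation=relu):
    """Fully connected layer: every node sees every input (one weight row per node)."""
    return [node(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]

# Illustrative numbers: 3 inputs feeding a layer of 2 nodes.
x = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 0.25],   # node 1: sum + bias = -0.25, ReLU gives 0.0
           [1.0, 0.5, 0.0]]     # node 2: sum + bias = 1.0, ReLU gives 1.0
biases = [0.5, -1.0]

print(dense_layer(x, weights, biases))  # [0.0, 1.0]
```

Stacking several such layers, each feeding its outputs to the next, gives the one-way flow described earlier.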

Step by step, the network processes the data and learns patterns. In image recognition, for example, the first layers may detect simple edges, the next layers shapes, and the deeper layers complete objects like faces, cars, or animals.

Output Layer – The Final Answer

The output layer gives the final result. It may be a label like “cat” or “spam mail,” a number such as a price, or in advanced designs, even text, images, or music.
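For a label like “cat”, a common (though not the only) design gives the output layer one node per class and passes the raw scores through softmax, which turns them into probabilities. The sketch below assumes three hypothetical classes and made-up output scores. For a number such as a price, the output layer would typically be a single node with no activation at all.

```python
import math

def softmax(scores):
    """Convert raw output scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical classes and made-up raw scores from an output layer.
labels = ["cat", "dog", "spam mail"]
raw_scores = [2.0, 1.0, 0.1]

probs = softmax(raw_scores)
best = max(range(len(labels)), key=lambda i: probs[i])  # highest-probability class

print([round(p, 3) for p in probs])
print(labels[best])  # cat
```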

From Basics to Powerful AI

Neural networks can solve simple tasks like classifying photos as well as complex ones like translation or driving. The basic form described here is the foundation; more advanced designs, such as convolutional networks for images and transformers for language, keep evolving, making neural networks one of the most important ideas in AI.

Brain vs Neural Networks: Key Differences

Although AI takes ideas from the brain, the two are very different. Let’s look at where the brain still holds an advantage and where machines are catching up.

Parameter Scale – Zillions vs Trillions

The human brain is a masterpiece of complexity. It has about 86 billion neurons and an estimated 100 trillion or more synapses. But a synapse is not just a simple number. It can strengthen, weaken, or even disappear with experience, and its behavior depends on electrical signals, timing, and chemicals like dopamine or serotonin. Every connection therefore holds many layers of information. If you count all these details, the brain’s “parameters” run into zillions, far beyond anything humans have built.

Artificial neural networks look much simpler. Their parameters are just numbers: the weights (and biases) adjusted during training. Once training ends, these numbers usually stay fixed until the model is retrained. Even the biggest models today, with up to a few trillion parameters, are still far smaller and less rich than the brain. For machines to match it, they would need not just more numbers but also the complexity and flexibility of biology.
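To see where a network’s parameter count comes from, here is a small sketch that counts weights and biases in a fully connected network. It assumes the small network described earlier (3 hidden layers of 50 nodes), fed by a 28×28 image (784 inputs) and ending in 10 output nodes, for example one per digit; the 10 outputs are an illustrative assumption.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected (dense) network.

    A layer with n_in inputs and n_out nodes has n_in * n_out weights
    (one per connection) plus n_out biases (one per node).
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total

# Assumed architecture: 784 inputs, three hidden layers of 50, 10 outputs.
small_net = [784, 50, 50, 50, 10]
print(count_parameters(small_net))  # 44860
```

Even this modest network has tens of thousands of parameters; large language models push the same counting into the trillions.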

More parameters alone cannot create intelligence, but matching the brain would likely require both far more parameters and richer connections.

Neuroplasticity: The Adaptability of the Brain

The human brain can rewire itself by changing the strength and number of connections between neurons. These changes happen mainly at the synapses, the tiny junctions where neurons communicate. When we practice a new skill, some synapses grow stronger, while unused ones may weaken or even disappear. Sometimes, neurons grow new branches that form fresh synapses, creating new pathways.

This ability means that if one path is damaged, another can grow—helping people recover after injury, learn new skills, or adapt to sudden changes.

Neural networks, by contrast, do not rewire on their own. Once trained, they mostly remain fixed unless retrained with new data. Scientists are now exploring approaches like continual learning to help machines adapt more like the brain.

Learning Process: One-Shot vs Big Data

A child may see a dog just once and recognize dogs from then on. That is brain learning: fast and efficient. Neural networks, however, typically need thousands or even millions of labeled dog photos to learn the same concept. They depend on heavy data and powerful computers.

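Brains learn from experience, adjusting connections gently and continuously. Machines learn mainly through backpropagation: the network’s error is traced backward through its layers to work out how much each weight contributed, and all the weights are then nudged to reduce that error, typically over many passes through large batches of data. After training, they stay mostly fixed.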
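Backpropagation is easier to picture in a tiny case. The sketch below assumes a toy network (1 input, 2 hidden nodes using the tanh activation, 1 output), five made-up data points from y = x squared, and fixed starting weights so the run is repeatable. For each example it sends the error backward through the layers using the chain rule, adds up the gradients over the whole dataset, and only then updates every weight at once, which is one way to read “correcting mistakes in bulk”. Real frameworks compute these derivatives automatically.

```python
import math

# Toy dataset (assumed for illustration): points from y = x * x.
data = [(-1.0, 1.0), (-0.5, 0.25), (0.0, 0.0), (0.5, 0.25), (1.0, 1.0)]

# Tiny network: 1 input -> 2 tanh hidden nodes -> 1 linear output.
w1 = [0.5, -0.5]   # input -> hidden weights
b1 = [0.1, 0.1]    # hidden biases
w2 = [0.3, 0.3]    # hidden -> output weights
b2 = 0.0           # output bias
lr = 0.1           # learning rate

def forward(x):
    h = [math.tanh(w1[j] * x + b1[j]) for j in range(2)]
    y_hat = sum(w2[j] * h[j] for j in range(2)) + b2
    return h, y_hat

def mean_loss():
    return sum((forward(x)[1] - y) ** 2 for x, y in data) / len(data)

initial_loss = mean_loss()
for epoch in range(2000):
    # Accumulate gradients over the whole dataset before changing anything.
    g_w1, g_b1, g_w2, g_b2 = [0.0, 0.0], [0.0, 0.0], [0.0, 0.0], 0.0
    for x, y in data:
        h, y_hat = forward(x)
        d_out = 2 * (y_hat - y)                     # dLoss/dy_hat
        g_b2 += d_out
        for j in range(2):
            g_w2[j] += d_out * h[j]                 # output-layer weight
            d_h = d_out * w2[j] * (1 - h[j] ** 2)   # chain rule through tanh
            g_w1[j] += d_h * x                      # hidden-layer weight
            g_b1[j] += d_h
    # Update every parameter at once, using the averaged gradients.
    n = len(data)
    for j in range(2):
        w1[j] -= lr * g_w1[j] / n
        b1[j] -= lr * g_b1[j] / n
        w2[j] -= lr * g_w2[j] / n
    b2 -= lr * g_b2 / n

final_loss = mean_loss()
print(initial_loss, final_loss)  # the final loss should be clearly lower
```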

Still, AI research is closing this gap. Techniques like reinforcement learning and continual learning try to bring machines closer to brain-like flexibility.

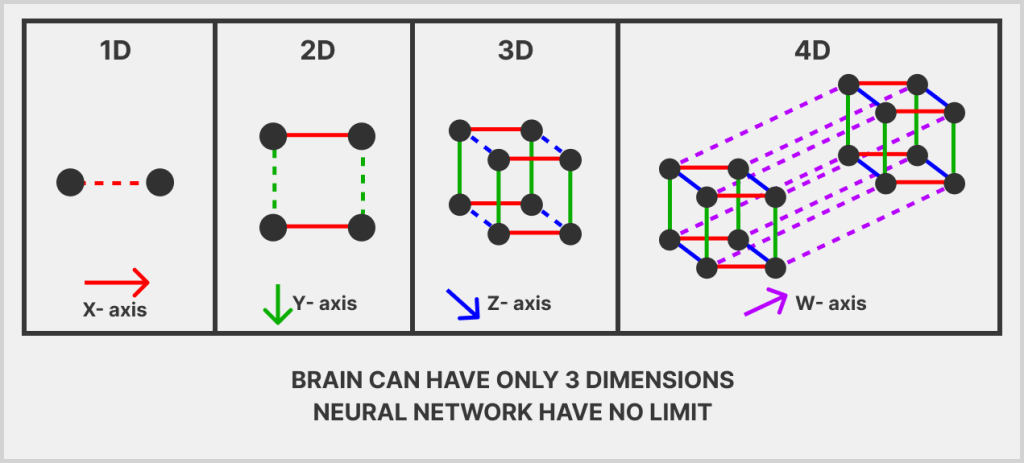

Dimensions: 3D Brain vs 2D Neural Networks

Another difference lies in shape. The brain’s architecture is three-dimensional, with neurons branching in every direction, so a single neuron can connect with thousands of others. This dense 3D wiring packs enormous connectivity into a small volume.

Most artificial neural networks, by contrast, are built in flat layers, like sheets of paper. However, this is not a fixed limitation. The numbers flowing through a network live in mathematical spaces with far more than three dimensions. Unlike our physical world, which is restricted to three dimensions, computational models can operate in thousands.

For example, OpenAI’s text-embedding-ada-002 model represents text as vectors with 1,536 dimensions, while Google’s BERT-Large uses 1,024 dimensions.
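One common way to compare vectors in such high-dimensional spaces is cosine similarity, which measures the angle between two vectors. The sketch below uses 1,536 dimensions to match the size mentioned above, but the vectors are random stand-ins, not real embeddings from any model: a slightly perturbed copy of a vector stays very close to it, while an independent random vector lands near zero.

```python
import math
import random

def cosine_similarity(a, b):
    """1.0 = same direction, about 0 = unrelated, -1.0 = opposite."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

DIM = 1536
random.seed(42)
base = [random.gauss(0, 1) for _ in range(DIM)]
# A "nearby meaning" stand-in: the base vector plus a little noise.
similar = [x + random.gauss(0, 0.1) for x in base]
# An "unrelated meaning" stand-in: an independent random vector.
unrelated = [random.gauss(0, 1) for _ in range(DIM)]

print(round(cosine_similarity(base, similar), 2))    # close to 1
print(round(cosine_similarity(base, unrelated), 2))  # close to 0
```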

It is almost beyond imagination how these models “dance” across thousands of dimensions, capturing meaning in ways humans cannot directly conceive.

Energy Efficiency: Brain’s Dim Bulb vs AI’s Data Centres

The brain runs on about 20 watts, the power of a dim bulb, yet by rough estimates handles around a quadrillion (a thousand trillion) operations per second. Large AI models need giant data centres drawing megawatts of power. That makes the brain far more energy-efficient.
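A quick back-of-the-envelope check of those figures, assuming the text’s rough estimates of 20 watts and 10^15 operations per second for the brain, plus an assumed round figure of 1 megawatt for a data centre (real facilities vary widely):

```python
# Rough figures from the text, plus one assumed round number.
BRAIN_WATTS = 20
BRAIN_OPS_PER_SECOND = 1e15     # "roughly a quadrillion", a rough estimate
DATA_CENTRE_WATTS = 1_000_000   # assumption: 1 megawatt, for illustration only

# One watt is one joule per second, so ops/second divided by watts = ops per joule.
brain_ops_per_joule = BRAIN_OPS_PER_SECOND / BRAIN_WATTS
power_ratio = DATA_CENTRE_WATTS / BRAIN_WATTS

print(f"{brain_ops_per_joule:.0e} operations per joule")        # 5e+13
print(f"A 1 MW facility draws {power_ratio:,.0f}x the brain's power")  # 50,000x
```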

AI’s Unique Superpowers (memory, speed, senses, scalability, endurance)

Neural networks already lead the brain in several ways. They can store and process massive amounts of data far faster than we can. In chips, signals are purely electrical and switch in nanoseconds. In the brain, signals also travel electrically inside neurons, but they must cross synapses using chemical messengers (neurotransmitters), which slows them to the millisecond range.

Another big edge is in senses. Humans rely mainly on five: sight, hearing, touch, taste, and smell. Artificial neural networks (ANNs) have no such limit: paired with the right sensors, they can take in infrared, ultrasound, radio waves, magnetic fields, or complex data from satellites and scientific instruments.

They are also easy to copy: once trained, a network can be cloned onto millions of machines, while every human must learn from scratch. And unlike us, they don’t need rest, food, or sleep. With speed, memory, broader senses, scalability, and nonstop endurance, neural networks already surpass the brain in key areas.

Brain vs Neural Networks: A Feature-by-Feature Table

| Feature | Brain | Neural Network (AI) |
| --- | --- | --- |
| Parameters | ~86 billion neurons, 100+ trillion synapses | Up to a few trillion parameters |
| Neuroplasticity | Rewires, grows new paths | Mostly fixed unless retrained |
| Learning | Quick, sometimes from one example | Needs huge datasets, many cycles |
| Dimensions | 3D wiring, branches everywhere | Flat layers, but uses high-dimensional math |
| Energy Efficiency | About 20 W (like a dim bulb) | Megawatts in data centres |
| Memory | Slow, limited | Vast; fast to store, process, and copy |
| Speed | Slower (electrical + chemical), milliseconds | Much faster (purely electrical), nanoseconds |
| Scalability | Limited by biology | Easily cloned and scaled |
| Endurance | Tires, needs rest | Nonstop, no fatigue |

Conclusion and Summary

So, who will win: the human mind or artificial intelligence?

To answer this, let us imagine three conditions. If they are met, AI could become smarter than humans.

- Matching Brain Complexity: AI systems scale far beyond today’s trillions of parameters while solving today’s energy and efficiency problems, until they rival the brain’s complexity.

- Decoding the Brain: The true challenge is not just building large and complex networks, but understanding how the brain itself solves problems. As science keeps decoding this masterpiece, we may not only copy it but also improve upon it. As Geoffrey Hinton once said:

“I have always been convinced that the only way to get artificial intelligence to work is to do the computation in a way like the human brain. That is the goal I have been pursuing. We are making progress, though we still have lots to learn about how the brain works.”

- Going Beyond Biology: AI can combine the best of both worlds, pairing brain-inspired designs like neural networks with innovations beyond biology, such as tokenization, embeddings, and vector databases. Think of airplanes: their wings resemble a bird’s, but instead of flapping, they rely on engines, a solution nature never invented. Likewise, while today’s AI relies on neural network architectures, the future may bring entirely new technologies not tied to the brain, such as quantum computing, creating independent paths of intelligence beyond biology.

A Race Still Running

If we succeed in building systems that approach the brain’s complexity, continue to decode how the brain truly works, and keep inventing beyond biology, then yes, AI could one day become smarter than us. The timeline is unclear, but the direction seems set. This story is not finished. The human brain is nature’s greatest creation so far, but perhaps, just perhaps, an even greater creation, born from our own hands, may be waiting just ahead.

Written By: Puneet Mittal